Tales from the Hunt: A Journey Through Repository Poisoning

In August 2025, the NX package on npm was compromised[4]. No version anomalies. No typosquatting. No obvious red flags that traditional security tools would catch. The attack only became visible during execution—when it harvested NPM tokens, GitHub keys, and SSH credentials. By then, it had already spread through CI/CD pipelines into production systems.

This is the new reality of repository poisoning. Attackers don’t break down your defenses—they walk through the front door using credentials you trust. Every modern application depends on package managers, such as npm, PyPI, and RubyGems. These are trusted infrastructure, essential for development and deployment. Security tools don’t flag package installations because they are considered expected behavior.

But attackers have weaponized this trust. A package named “reqeusts” exists on PyPI with thousands of downloads. Not “requests”, but “reqeusts”, with two letters swapped. Version 99.99.99. It’s not a typo; it’s a Trojan hiding in plain sight. These attacks have evolved from manual typosquatting to self-replicating, AI-powered registry attacks that spread across hundreds of packages[2], with phishing-based compromises affecting packages that receive billions of downloads weekly[1].

How do you distinguish malicious packages from the thousands of legitimate ones flowing through your environment daily? How do you catch attacks that use trusted channels and legitimate functionality?

Through behavioral patterns. While I investigated repository poisoning, eight distinct patterns emerged that consistently reveal these attacks: patterns that remain invisible to traditional security tools but become obvious once you know what to look for.

The Eight Patterns: A Roadmap

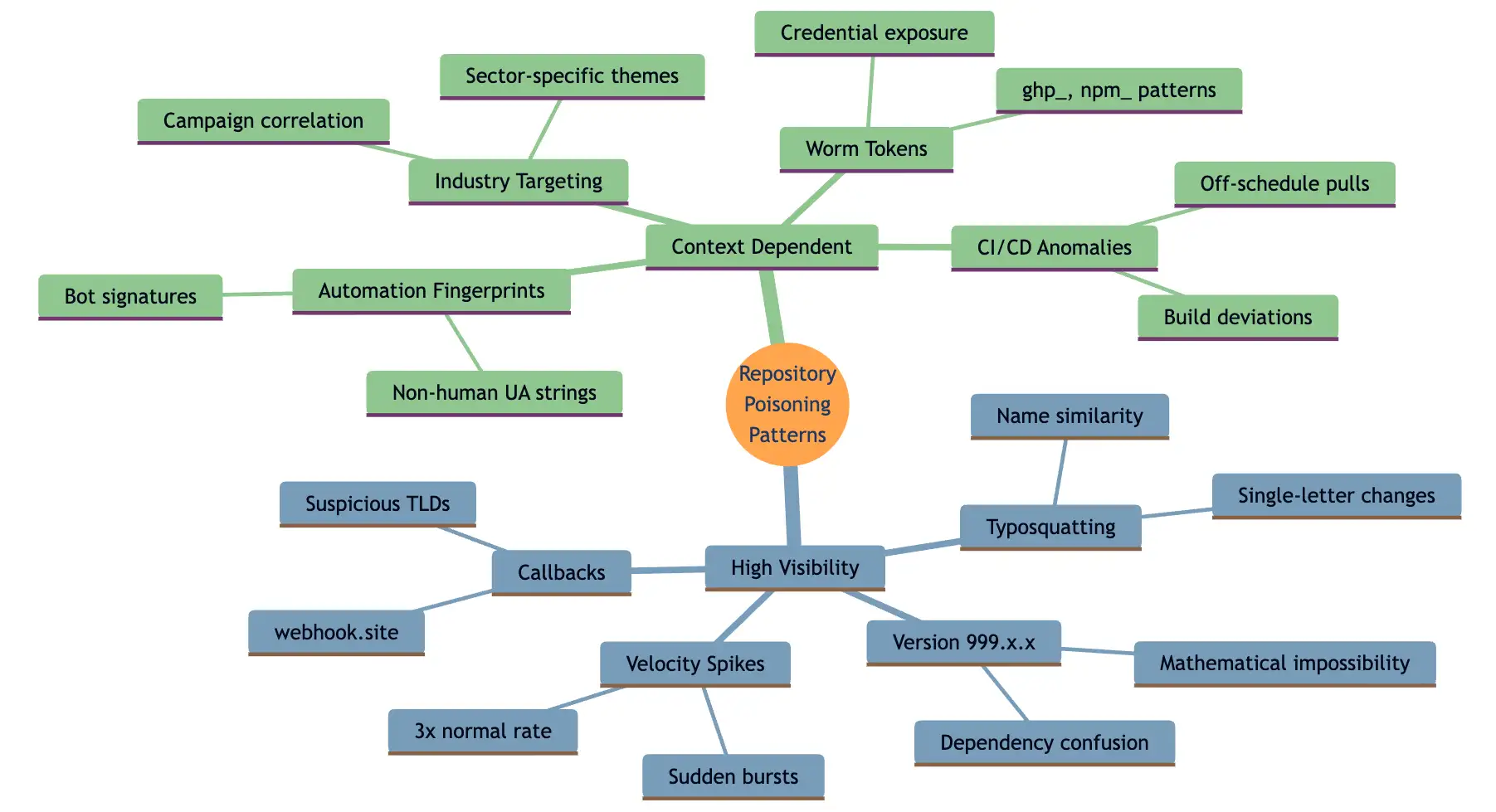

How to read this map: The eight patterns are split into two categories based on observability. High-visibility patterns are readily apparent in network traffic and can be quickly verified. Context-dependent patterns require baseline knowledge, threat intelligence, or system metadata to validate. Each pattern below reveals specific behavioral indicators that distinguish malicious packages from legitimate developer activity.

Hunter’s Log: Eight Patterns from the Field

The following patterns emerged from tracking and analyzing current repository poisoning campaigns across the threat landscape.

Entry 1: The Version Number Anomaly

The first pattern that catches my attention is always version numbers. No legitimate package jumps to version 999.999.999. Research has demonstrated that attackers exploit package managers’ preference for higher version numbers to hijack internal package names: when a package manager sees a public package at version 999.0.0 and an internal package at version 1.2.3, it “upgrades” to the attacker’s version. Versions like these are statistically improbable in normal development, which makes them a clear inbound indicator of dependency confusion attacks.
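A minimal sketch of this check, assuming you can extract the requested version string from registry or proxy logs. The threshold of 99 and the calendar-version exemption are illustrative assumptions, not tuned values; `is_version_anomaly` is a hypothetical helper name.

```python
import re
from datetime import date

# Hypothetical threshold: legitimate packages rarely reach a major version
# of 99, but tune this against your own registry data.
SUSPICIOUS_MAJOR = 99

# Matches the leading numeric component, with an optional "v" prefix.
_LEADING_INT = re.compile(r"^v?(\d+)")


def is_version_anomaly(version: str, threshold: int = SUSPICIOUS_MAJOR) -> bool:
    """Return True if the major version is implausibly large."""
    match = _LEADING_INT.match(version.strip())
    if not match:
        return False  # Leave unparseable versions to other checks.
    major = int(match.group(1))
    # Calendar-versioned packages (e.g. 2024.2.2) legitimately use the year
    # as the major component, so treat plausible years as normal.
    if 2000 <= major <= date.today().year + 1:
        return False
    return major >= threshold


for v in ["1.2.3", "999.0.0", "99.99.99", "2024.2.2"]:
    print(v, is_version_anomaly(v))
```

The calendar-version exemption trades a little coverage for fewer false positives: an attacker who picks a year-like major such as next year's number would slip past this check, so pair it with a check of internal package names against public registries.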

Entry 2: The Typosquatter’s Game

Package names tell stories. “reqeusts” instead of “requests”, “nupmy” instead of “numpy”—these aren’t accidents. They’re deliberate traps for tired developers and automated systems. These typosquatted packages accumulate downloads surprisingly quickly, all of which is inbound traffic from victims who made a simple mistake or whose automation had a typo in its configuration. The pattern is consistent: popular package names with single-letter variations, number suffixes like “lodash-2”, or creative misspellings that pass casual inspection.
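One way to sketch this check is an edit-distance comparison against a list of popular package names you maintain. Both example typos above are adjacent-letter swaps, which plain Levenshtein distance counts as two edits, so this sketch uses optimal string alignment distance (Levenshtein plus transpositions). The popular-name list, the distance cutoff, and the helper names are assumptions for illustration.

```python
import re


def osa_distance(a: str, b: str) -> int:
    """Optimal string alignment distance: Levenshtein plus adjacent swaps."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution
            )
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # transposition
    return d[-1][-1]


def likely_typosquat(name, popular, max_distance=1):
    """Return the popular package that `name` appears to imitate, or None."""
    candidate = name.lower()
    if candidate in popular:
        return None  # Exact match: the real package.
    for target in sorted(popular):
        if osa_distance(candidate, target) <= max_distance:
            return target
        # Number-suffix variants such as "lodash-2".
        if re.fullmatch(rf"{re.escape(target)}[-_.]?\d+", candidate):
            return target
    return None
```

Short names produce many near neighbors (for example, three-letter packages one edit apart), so expect to allowlist known legitimate pairs.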

Entry 3: Automation’s Fingerprint

Machines and humans behave differently. When I see user agents like “SSLoad/1.1” or rapid-fire package pulls (dozens of packages in minutes), the source is almost never human. These automation fingerprints are bidirectional indicators: they can signal bot activity in either direction, whether downloading or uploading packages. The key is velocity, because humans don’t typically download massive numbers of packages in short timeframes. This pattern becomes especially concerning when combined with other indicators, as it often represents automated attack tools or compromised CI/CD systems.
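A minimal sketch of both signals, assuming your logs give you a timestamp, a client identifier, and a user-agent string per package request. The watchlist contains only the agent named above, and the 30-requests-in-5-minutes threshold is a hypothetical stand-in for “dozens of packages in minutes.” The `Request` type and `automation_flags` function are illustrative names.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

# Hypothetical watchlist; extend it from your own intelligence.
SUSPICIOUS_USER_AGENTS = ("SSLoad/",)


@dataclass
class Request:
    timestamp: float  # seconds since epoch
    client: str       # source IP or host identifier
    user_agent: str
    package: str


def automation_flags(events, window_seconds=300, max_requests=30):
    """Return {client: reasons} for clients whose traffic looks automated."""
    flags = defaultdict(set)
    windows = defaultdict(deque)
    for req in sorted(events, key=lambda r: r.timestamp):
        if req.user_agent.startswith(SUSPICIOUS_USER_AGENTS):
            flags[req.client].add("suspicious-user-agent")
        # Sliding window of this client's recent request timestamps.
        window = windows[req.client]
        window.append(req.timestamp)
        while req.timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_requests:
            flags[req.client].add("burst")
    return dict(flags)
```

Legitimate CI runners will also trip the burst rule, which is why this signal works best as one input alongside the other patterns rather than as an alert on its own.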

Entry 4: The Velocity Spike

Normal package installation has a rhythm. Development teams have established patterns: sprint deployments, regular updates, and predictable cycles. However, when I observe velocity anomalies, such as three times the normal rate or sudden bursts of package downloads, it piques my curiosity. These inbound spikes often indicate worm propagation or mass infection attempts. The pattern is distinctive: instead of the gradual increase you’d see with a deployment rollout, these spikes appear suddenly and intensely.
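The “three times the normal rate” rule can be sketched as a comparison against a trailing baseline. This assumes hourly download counts for one environment; the one-week median baseline and the minimum-count floor are illustrative choices, not values from the text.

```python
from statistics import median


def velocity_spikes(hourly_counts, baseline_hours=168, ratio=3.0, min_count=20):
    """Yield (hour_index, count, baseline) where count >= ratio * baseline.

    The baseline is the median of the preceding `baseline_hours` hours
    (168 = one week, an assumed window). `min_count` suppresses "spikes"
    in otherwise quiet environments, e.g. 1 -> 3 downloads.
    """
    for i in range(baseline_hours, len(hourly_counts)):
        baseline = median(hourly_counts[i - baseline_hours:i])
        count = hourly_counts[i]
        if count >= min_count and count >= ratio * max(baseline, 1):
            yield i, count, baseline
```

A median baseline is used rather than a mean so that one earlier spike does not inflate the notion of “normal” for the following week.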

Entry 5: Industry-Specific Targeting

Certain APT campaigns use themed packages to target specific sectors. Aerospace companies see aerospace-themed packages. Crypto firms encounter blockchain utilities. Now we’re seeing AI/ML-themed packages targeting machine learning pipelines. These inbound attacks require threat intelligence enrichment to be fully understood, because package names alone may not reveal the targeting without context about current campaign themes. In my opinion, if your industry is trending in the news or growing rapidly, you’re likely seeing themed attacks.
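A rough sketch of the enrichment step: tokenize package names and match them against theme keywords. The keyword sets below are hypothetical placeholders; in practice they would come from your threat-intelligence feed and be refreshed as campaign themes change.

```python
import re

# Hypothetical theme keywords; replace with your threat-intelligence feed.
THEME_KEYWORDS = {
    "aerospace": {"avionics", "flight", "satellite", "aero"},
    "crypto": {"wallet", "blockchain", "web3", "solana", "ethers"},
    "ai-ml": {"llm", "gpt", "torch", "tensor", "embedding"},
}


def themes_for(package_name):
    """Return the set of themes whose keywords appear as name tokens."""
    tokens = set(re.split(r"[-_.]+", package_name.lower()))
    return {theme for theme, words in THEME_KEYWORDS.items() if tokens & words}
```

Exact token matching misses concatenated names such as “aerokit”; that is a deliberate trade-off against flagging every name containing a short substring.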

Entry 6: The Self-Replicating Nightmare

Worm behavior has distinct patterns: token patterns in URLs (“ghp”, “npm”, or other credentials exposed in requests), combined with repetitive package names and massive distribution velocity. Recent worm variants harvest credentials to auto-publish malicious versions, creating a bidirectional pattern: tokens are harvested outbound while packages spread inbound. The repetitive nature of these patterns, like echoes in the data, makes them visible even without knowing the specific worm variant.
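A minimal sketch of the token-in-URL check. It assumes you can see full URLs (for HTTPS this usually means proxy logs with TLS inspection) and assumes the published token formats: GitHub tokens with prefixes such as `ghp_`, `gho_`, `ghu_`, `ghs_`, `ghr_` followed by 36 alphanumerics, and npm access tokens starting with `npm_`. Verify the exact lengths against current vendor documentation before relying on them.

```python
import re
from urllib.parse import unquote

# Assumed token formats; confirm against current GitHub and npm docs.
TOKEN_PATTERNS = {
    "github": re.compile(r"\bgh[pousr]_[A-Za-z0-9]{36}\b"),
    "npm": re.compile(r"\bnpm_[A-Za-z0-9]{36}\b"),
}


def tokens_in_url(url):
    """Return the sorted token kinds found in a (percent-decoded) URL."""
    decoded = unquote(url)  # Catch tokens hidden behind %-encoding.
    return sorted(
        kind for kind, pattern in TOKEN_PATTERNS.items() if pattern.search(decoded)
    )
```

Only the token half of the pattern is shown here; the repetitive-package-name half can reuse the velocity and automation checks.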

Real-world validation: The August 2025 NX package compromise[4] illustrates the importance of observing token patterns. While the initial compromise bypassed typical supply chain indicators—no version anomalies, no typosquatting—the attack became visible during execution when it harvested NPM tokens, GitHub keys, and SSH credentials. Pattern #6 captures this reconnaissance phase, while Pattern #8 captures the subsequent exfiltration to attacker-controlled GitHub repositories.

Hunting caveat: This pattern relies on token visibility in network traffic. If attackers encrypt credentials before transmission, or exfiltrate them in HTTPS POST bodies rather than in URLs, this pattern goes blind. Local-only reconnaissance without network activity won’t trigger it either; you’d need endpoint telemetry (process execution, file access) to catch those cases. I advise you to layer your hunting approach accordingly.

Entry 7: Build System Compromise

CI/CD systems are crown jewels, and attackers know it[3]. When build systems start downloading unusual packages, it’s an inbound pattern that could affect every deployment. The challenge is that CI/CD traffic often blends with normal development activity. You need context, meaning a baseline understanding of what your build systems typically do, to spot anomalies. But when you do see them, the blast radius is enormous: one poisoned package in CI/CD impacts everything downstream.
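The baseline idea can be sketched as a set difference between what a build pulls now and what past builds usually pulled. This assumes you can reconstruct the package list per build (for example from lockfiles or proxy logs); the 20% recurrence threshold and the function names are hypothetical.

```python
from collections import Counter


def build_baseline(past_builds, min_fraction=0.2):
    """Packages seen in at least `min_fraction` of past builds."""
    counts = Counter(pkg for build in past_builds for pkg in set(build))
    needed = max(1, int(len(past_builds) * min_fraction))
    return {pkg for pkg, n in counts.items() if n >= needed}


def new_in_build(current_build, baseline):
    """Packages in the current build that the baseline has never seen."""
    return sorted(set(current_build) - baseline)
```

Recording entries as `name==version` instead of bare names would also surface unexpected version jumps, at the cost of more noise from routine upgrades.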

Entry 8: The Callback Trail

Post-compromise activity leaves traces. Callbacks to webhook.site, Discord webhooks, or suspicious domains with TLDs like .tk or .ml are outbound patterns that indicate potential exfiltration. While most patterns focus on packages coming in, this one monitors data going out. It’s often the first visible sign that a package has executed malicious code, making it a critical observation point even if you missed the initial infection.
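A minimal sketch of an outbound-URL classifier covering the three examples above. It assumes Discord webhook URLs follow the `/api/webhooks/...` path (optionally with an API version segment); the watchlists are deliberately small and should be extended from your own intelligence.

```python
import re
from typing import Optional
from urllib.parse import urlsplit

WATCHED_HOSTS = {"webhook.site"}
WATCHED_TLDS = {"tk", "ml"}
DISCORD_HOSTS = {"discord.com", "discordapp.com"}
# Assumed path shape: /api/webhooks/... or /api/v10/webhooks/...
DISCORD_WEBHOOK_PATH = re.compile(r"^/api/(v\d+/)?webhooks/")


def callback_reason(url: str) -> Optional[str]:
    """Return why an outbound URL looks like a callback, or None."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower().rstrip(".")
    if host in WATCHED_HOSTS or any(host.endswith("." + h) for h in WATCHED_HOSTS):
        return "webhook-service"
    if host in DISCORD_HOSTS and DISCORD_WEBHOOK_PATH.match(parts.path):
        return "discord-webhook"
    if host.rsplit(".", 1)[-1] in WATCHED_TLDS:
        return "watched-tld"
    return None
```

Matching on the Discord webhook path rather than the whole domain keeps ordinary Discord chat traffic out of the results.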

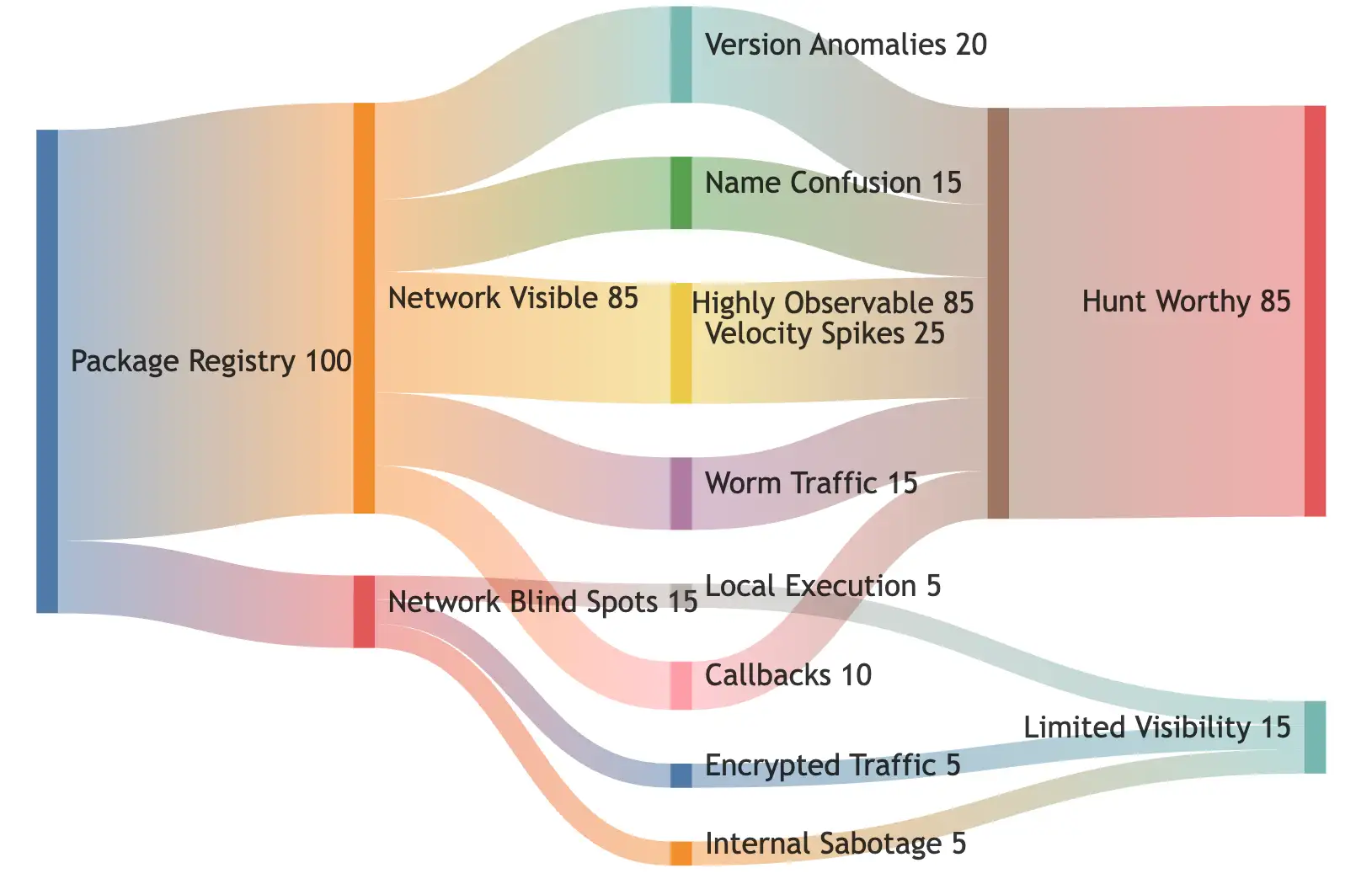

The Struggle: Observability Reality Check

Here’s what might keep you up at night: we can only see what flows through our network traffic monitoring—and unfortunately, not all patterns are equally visible.

Reading the flow: This diagram reveals the hunting reality—85% of repository poisoning patterns leave observable traces in network traffic, creating opportunities for hunting. The remaining 15% hit blind spots where network monitoring fails, including local execution after package download, encrypted command-and-control channels, and insider sabotage, such as the Colors/Faker incident[5]. Your hunting effectiveness depends on understanding which patterns flow through observable channels and which require alternative telemetry sources, such as endpoint monitoring or code repository auditing.

High-Visibility Patterns

Highly Observable in Network Traffic:

- Version Anomalies (999.x.x): Crystal clear in network logs when packages with extreme versions are downloaded.

- Typosquatting: Package names in URLs are logged, so “reqeusts” vs “requests” is obvious.

- Velocity Spikes: Mass downloads create unmistakable traffic patterns.

- Worm Propagation: Repetitive, automated requests stand out in flow analysis.

- Callback Traffic: Webhook.site and similar endpoints are red flags in outbound logs.

Patterns Requiring Context:

Observable with Additional Data Sources:

- Automation Patterns: Only visible if user-agent strings are captured.

- CI/CD Exploitation: Blends with normal development traffic without build metadata.

- APT Themes: Requires threat intelligence enrichment to identify sector-specific targeting.

Observability Gaps:

Limited or No Visibility:

- Local package execution after download

- Encrypted command & control channels

- Internal maintainer sabotage (Colors/Faker style[5])

- Side-loading and local repository changes

The harsh reality? Network monitoring catches the obvious attacks but misses the subtle ones. I repeat, layer your hunting approach accordingly.

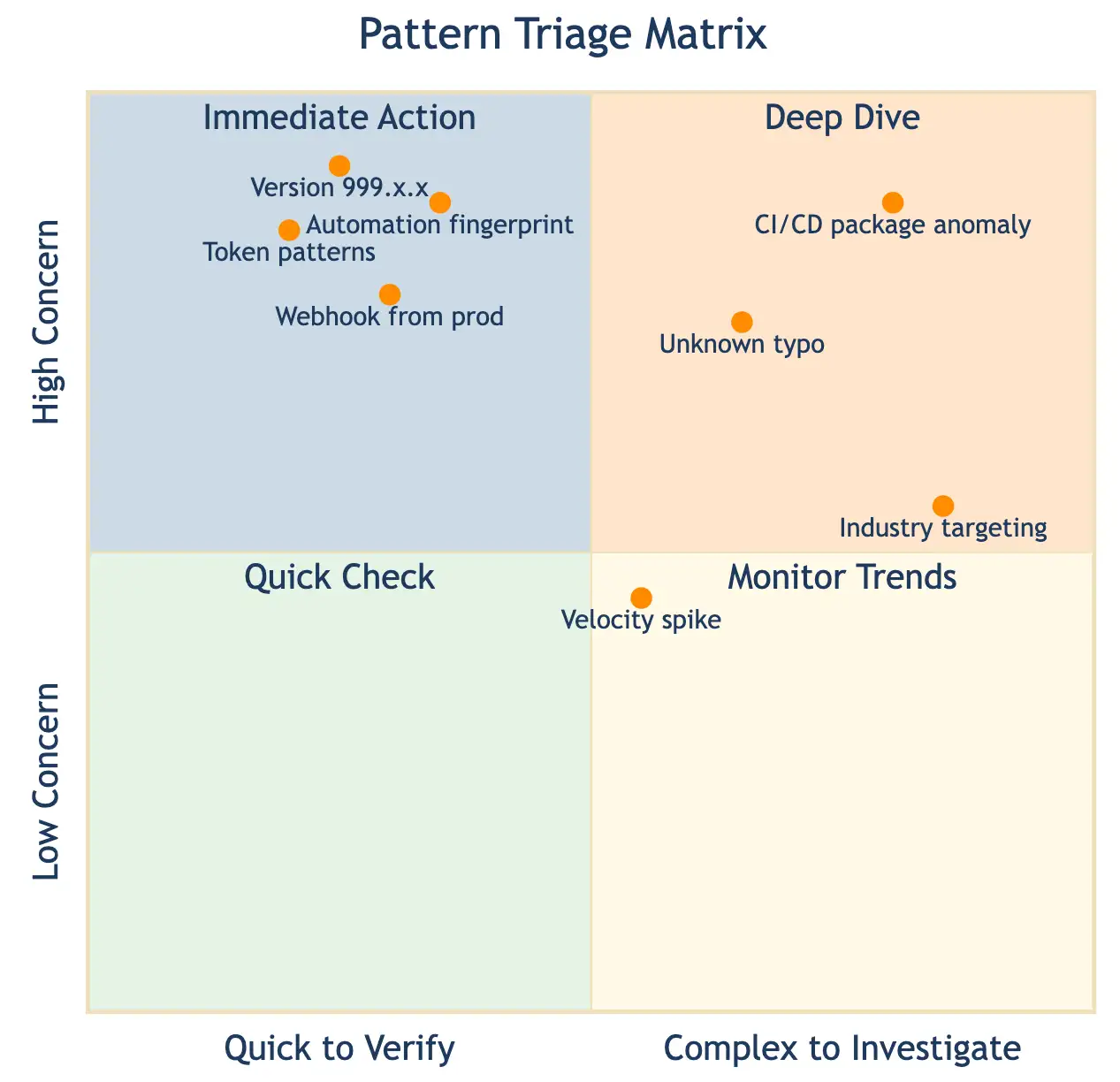

Pattern Triage Matrix

When a pattern surfaces during your hunt, the first question is: “How urgent is this?” Not all patterns require immediate action, and not all demand in-depth analysis. This matrix categorizes patterns along two dimensions: threat probability (the likelihood that the activity is malicious) and investigation complexity (the effort needed to verify it).

How to use this triage matrix when hunting:

When a pattern shows up in your data, find its location on the chart to decide what to do next. Patterns higher up are more likely to be real threats. Patterns further to the right require more investigation.

- Top-right (Deep Dive): Likely serious but complex to confirm; requires a thorough, detailed investigation.

- Top-left (Immediate Action): Likely a real threat and easy to verify; respond right away.

- Bottom-left (Quick Check): Low concern and simple to check; if any pattern lands here, review it quickly and move on.

- Bottom-right (Monitor Trends): Low concern but harder to check; watch over time to spot changes or developing threats.
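The quadrant lookup can be expressed as a small function. This sketch assumes you score both dimensions on a 0 to 1 scale and split each at 0.5; both the scale and the cut-off are hypothetical, since the matrix itself places patterns qualitatively.

```python
def triage_quadrant(threat_probability: float, investigation_complexity: float,
                    threshold: float = 0.5) -> str:
    """Map two scores in [0, 1] to a triage quadrant name."""
    for score in (threat_probability, investigation_complexity):
        if not 0.0 <= score <= 1.0:
            raise ValueError("scores must be in [0, 1]")
    likely_threat = threat_probability >= threshold   # upper half
    hard_to_verify = investigation_complexity >= threshold  # right half
    if likely_threat and not hard_to_verify:
        return "Immediate Action"  # top-left
    if likely_threat:
        return "Deep Dive"         # top-right
    if hard_to_verify:
        return "Monitor Trends"    # bottom-right
    return "Quick Check"           # bottom-left
```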

Why this matters:

Many of the highest-risk patterns (like impossible version numbers, exposed tokens, automation footprints, or unexpected webhooks) end up in the Immediate Action area. Attackers can’t hide when these obvious signs appear, which makes quick triage possible. Other patterns require additional business or technical context (e.g., CI/CD package anomalies or new industry targeting), so they land in quadrants that call for extra analysis. Following these priorities prevents wasted effort and ensures prompt action on genuinely urgent threats.

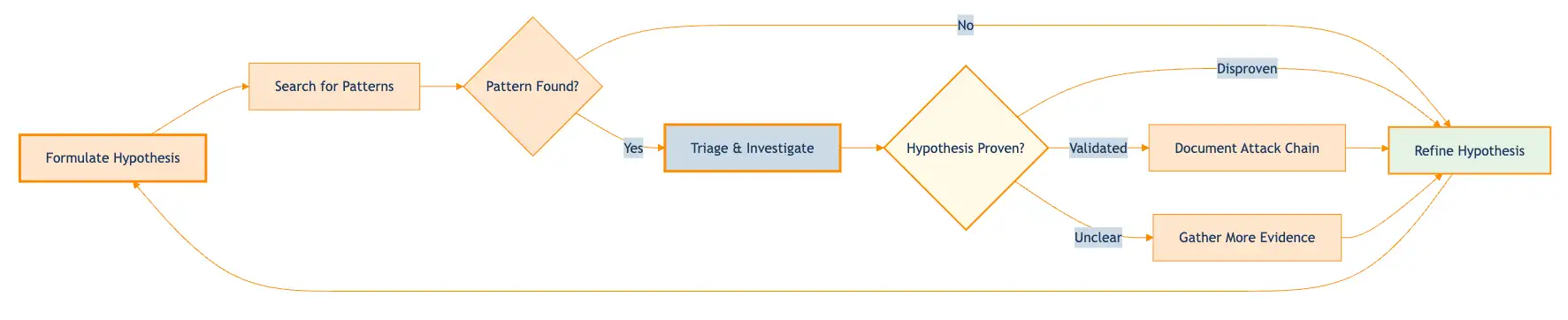

The Hunt Loop: Testing Your Hypothesis

Threat hunting is an iterative process of hypothesis testing, not a linear investigation. You formulate a hypothesis (“Our environment has dependency confusion attacks”), search for patterns, and test whether evidence proves or disproves your theory. The loop continues: validated hypotheses become documented findings, disproven theories refine your next hypothesis, and unclear results drive deeper evidence gathering.

The simplicity of this loop is intentional—the complexity resides in the “Triage & Investigate” step, which varies based on the pattern detected and the context of your environment. That’s where the Hunt Decision Reference below becomes essential.

Hunt Decision Reference: From Pattern to Action

The Hunt Loop shows the process, but when you’re mid-investigation, you need immediate answers: “What do I check? What does ‘baseline’ mean for THIS pattern? What indicates correlation?” It’s my hope that this reference table helps you translate each pattern into something actionable.

| Pattern Detected | Triage Category | Investigation Questions | What to Check |
| --- | --- | --- | --- |
| Version 999.x.x | Immediate Action | | |
| Token patterns (ghp, npm, etc.) | Immediate Action | | |
| Automation fingerprint (SSLoad/1.1, etc.) | Immediate Action | | |
| Webhook callback (webhook.site, .tk, .ml) | Immediate Action | | |
| CI/CD package anomaly | Deep Dive | | |
| Unknown typo package (reqeusts, nupmy) | Deep Dive | | |
| Velocity spike (3x normal rate) | Monitor Trends | | |
| Industry targeting (aerospace, crypto, ML themes) | Deep Dive | | |

Now, let’s talk about the specific false positives that will consume your hunting cycles if you’re not prepared:

Hard-Learned Lessons About False Positives

Over months of refining these hunting patterns, I’ve encountered numerous false-positive scenarios. Let me share what I’ve learned to save you time:

Sprint Deployments Will Look Suspicious

Mass package updates during sprints can resemble attacks. Check the team’s deployment calendar before escalating.
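A minimal sketch of that calendar check, assuming deployment windows can be exported as `(team, start, end)` tuples; the format and `route_alert` are hypothetical. Alerts inside a window are routed for confirmation rather than silently dropped, since a planned deployment is also good cover.

```python
from datetime import datetime
from typing import Iterable, Tuple

Window = Tuple[str, datetime, datetime]  # (team, start, end), assumed format


def route_alert(team: str, when: datetime, calendar: Iterable[Window]) -> str:
    """Return 'confirm-with-team' during a planned deployment, else 'escalate'."""
    for window_team, start, end in calendar:
        if window_team == team and start <= when <= end:
            # Overlaps a planned sprint deployment: likely benign, but confirm.
            return "confirm-with-team"
    return "escalate"
```

The same approach covers the scheduled dependency-update jobs described under “CI/CD Systems Are Chatty”: add their schedules to the calendar.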

Developers Test Weird Things

That webhook.site callback at 2 AM? It could be a developer testing. But verify anyway: developer testing also provides good cover for real attacks.

Your Internal Package Names Matter

Internal packages with names similar to public packages will frequently surface during hunts. This is the dependency confusion attack vector: a package manager that can see both registries may resolve the public package, typically because it carries a higher version number, instead of the internal one. If you aren’t already, consider naming conventions with company-specific prefixes to avoid namespace collisions in the registry.
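A sketch of a namespace audit for Python packages. It uses PyPI’s JSON endpoint (`https://pypi.org/pypi/<name>/json`, which returns 404 for unknown projects) to detect public collisions; the `acme-` prefix in the test is a hypothetical example, and the existence check is injectable so you can swap in another registry.

```python
import urllib.error
import urllib.parse
import urllib.request


def exists_on_pypi(name: str, timeout: float = 10.0) -> bool:
    """True if a project with this name is published on public PyPI."""
    url = f"https://pypi.org/pypi/{urllib.parse.quote(name)}/json"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise  # Other HTTP errors need a human to look at them.


def namespace_report(internal_names, prefix, exists=exists_on_pypi):
    """Map each internal package name to a list of naming issues."""
    report = {}
    for name in internal_names:
        issues = []
        if not name.startswith(prefix):
            issues.append("missing-prefix")
        if exists(name):
            issues.append("public-collision")
        report[name] = issues
    return report
```

A public collision does not prove an attack (the name may be coincidentally taken), but either way the internal package is exposed to dependency confusion until it is renamed or the resolver is pinned to the internal index.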

Global Teams Break Time-Based Patterns

“Suspicious after-hours activity” loses meaning when distributed teams pull packages across time zones. I now factor in timezone distribution and global registry access patterns before investigating temporal anomalies in package downloads.

CI/CD Systems Are Chatty

Automated dependency updates create velocity spikes that look suspicious. Documenting automation schedules and understanding your build systems’ normal registry pull patterns can significantly reduce false positives.

Legacy Systems Do Weird Things

Older systems using outdated package managers may pull packages without version pinning, use deprecated registries, or have unusual dependency resolution behaviors. When you encounter these oddities, try to keep notes on known package manager quirks for each system.

The Bottom Line

Repository poisoning succeeds because the infrastructure is inherently trusted, the activity mimics legitimate behavior, and traditional security tools are not positioned to monitor it. By the time you catch it, execution has often already occurred.

However, these hunting theories work because attackers can’t hide the numbers: extreme versions, unusual velocities, and pattern frequencies create statistical fingerprints. Certain behaviors remain universally suspicious, and context often reveals the intent behind them. The patterns persist across campaigns.

These eight theories aren’t perfect. They’ll surface false positives. You’ll investigate legitimate activity. But they’ll also catch attacks that no signature-based system ever could.

Because in the world of supply chain attacks, you’re not hunting malware; you’re hunting patterns that just don’t add up.

References

[1] Birsan, A. (2021). “Dependency Confusion: How I Hacked Into Apple, Microsoft and Dozens of Other Companies.” Medium. Retrieved from https://medium.com/@alex.birsan/dependency-confusion-4a5d60fec610

[2] Sonatype. (2024). “State of the Software Supply Chain Report – 10 Year Look Back.” Retrieved from https://www.sonatype.com/state-of-the-software-supply-chain/2024/10-year-look

[3] OWASP. (2024). “CICD-SEC-3: Dependency Chain Abuse.” OWASP Foundation. Retrieved from https://owasp.org/www-project-top-10-ci-cd-security-risks/CICD-SEC-03-Dependency-Chain-Abuse

[4] Deepwatch Labs. (2025). “NX Breach: A Story of Supply Chain Compromise and AI Agent Betrayal.” Retrieved from https://www.deepwatch.com/labs/nx-breach-a-story-of-supply-chain-compromise-and-ai-agent-betrayal/

[5] Fossa. (2022). “Open Source Developer Sabotages npm Libraries ‘Colors,’ ‘Faker.’” Retrieved from https://fossa.com/blog/npm-packages-colors-faker-corrupted/
