Struggling with Alert Fatigue, Dirty Logs, and Useless Reports in Splunk?

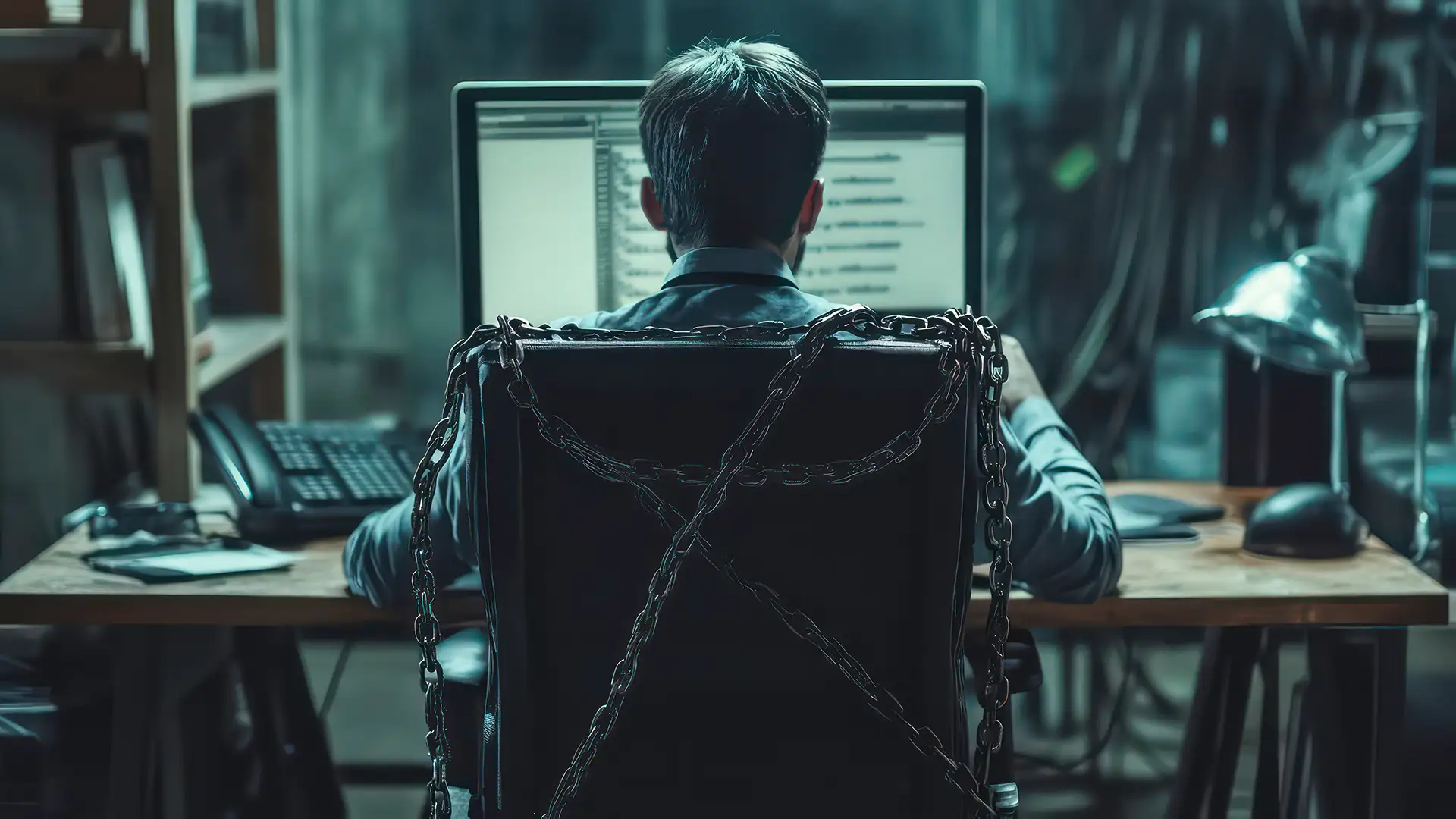

Splunk is one of the most powerful tools in the security stack, but too often, it’s also one of the noisiest, most chaotic, and hardest to maintain.

Security teams spend more time tuning, triaging, and troubleshooting than detecting and responding. And for lean teams, that tradeoff can quietly undermine the value of your entire SIEM investment.

If you’re experiencing alert fatigue, log ingestion chaos, or weak reporting from your Splunk environment, you’re not alone, and you’re not doing it wrong. You’re dealing with real operational limits that the tool alone can’t solve.

1. Alert Fatigue: When “More Data” Becomes Too Much

Splunk’s flexibility is a blessing and a curse. It can ingest nearly anything, but without tight use case mapping and rule tuning it quickly becomes a firehose. The symptoms are familiar:

- Dozens or hundreds of false positives every day

- Overlapping correlation rules triggering duplicate alerts

- Fatigued analysts triaging false positives instead of hunting real threats

This kind of fatigue doesn’t just slow down response; it creates blind spots. Real incidents get missed, not because of tech failure, but because the signal is buried in noise.

Business impact: Delayed or missed detection of real threats, prolonged dwell time, and increased risk of breach. Even worse, executives often have no visibility into these gaps until after damage is done.
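To make the overlapping-rule problem concrete, here is a minimal sketch of how you might spot it in exported alert data. It assumes each alert is a simple record with hypothetical `rule`, `entity`, and `time` fields (these names are illustrative, not a Splunk schema) and flags cases where different rules fire on the same entity within a few minutes of each other.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_duplicate_alerts(alerts, window=timedelta(minutes=5)):
    """Return groups of alerts where more than one distinct rule fired on the
    same entity within `window` of the first alert in the group."""
    by_entity = defaultdict(list)
    for alert in alerts:
        by_entity[alert["entity"]].append(alert)

    def flush(group, out):
        # Keep the group only if at least two different rules contributed.
        if len({a["rule"] for a in group}) > 1:
            out.append(group)

    duplicates = []
    for entity_alerts in by_entity.values():
        entity_alerts.sort(key=lambda a: a["time"])
        group = [entity_alerts[0]]
        for alert in entity_alerts[1:]:
            if alert["time"] - group[0]["time"] <= window:
                group.append(alert)
            else:
                flush(group, duplicates)
                group = [alert]
        flush(group, duplicates)
    return duplicates


# Hypothetical sample data; rule and host names are made up for illustration.
alerts = [
    {"rule": "Brute Force Login", "entity": "host-a", "time": datetime(2024, 1, 1, 10, 0)},
    {"rule": "Excessive Failed Auth", "entity": "host-a", "time": datetime(2024, 1, 1, 10, 2)},
    {"rule": "Brute Force Login", "entity": "host-b", "time": datetime(2024, 1, 1, 10, 1)},
    {"rule": "Excessive Failed Auth", "entity": "host-a", "time": datetime(2024, 1, 1, 11, 0)},
]
duplicates = find_duplicate_alerts(alerts)
```

In this sample, the two rules that fire on `host-a` two minutes apart form one duplicate group. Rule pairs that show up together repeatedly are reasonable candidates for merging or suppression.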

2. Bad Data Hygiene: When Logs Cost You More Than They Help

In theory, more logs = better visibility. In reality?

- Logs are often unparsed or miscategorized.

- Ingestion costs spike with no added detection value.

- Security teams waste time chasing meaningless artifacts.

Without consistent log source onboarding, normalization, and enrichment, Splunk becomes bloated, and your team burns time sifting through noise instead of focusing on threat signals.

Business impact: Ballooning Splunk license costs, wasted security team hours, and a false sense of coverage. You pay more without actually seeing more.
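A rough way to find logs that cost more than they help is to compare ingest volume with detection usage. The sketch below assumes you can export two things: daily ingest in GB per source and a count of detections that rely on each source. The source names, numbers, and the 1 GB threshold are all hypothetical.

```python
def low_value_sources(ingest_gb_per_day, detections_per_source, min_gb=1.0):
    """Return (source, gb) pairs that ingest at least `min_gb` per day but
    have no detections using them, largest volume first."""
    flagged = [
        (source, gb)
        for source, gb in ingest_gb_per_day.items()
        if gb >= min_gb and detections_per_source.get(source, 0) == 0
    ]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical figures for illustration only.
ingest_gb_per_day = {
    "firewall": 120.0,
    "dns": 45.0,
    "debug_app_logs": 80.0,
    "edr": 30.0,
    "printer": 0.2,
}
detections_per_source = {"firewall": 12, "dns": 4, "edr": 25}

flagged = low_value_sources(ingest_gb_per_day, detections_per_source)
```

Here only `debug_app_logs` is flagged: it is high volume and no detection depends on it. A source on this list is not automatically safe to drop, since it may matter for compliance or investigations, but it is a good place to start asking questions.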

3. Poor Reporting: When You Can’t Tell the Story That Matters

You’ve got dashboards. Maybe even daily metrics. But if your reporting doesn’t:

- Demonstrate a reduction in real risk.

- Align with threat frameworks such as MITRE ATT&CK.

- Assist leadership in understanding detection coverage and response maturity.

…then you’re collecting data without converting it into trust.

Business impact: Inability to communicate security effectiveness to the board, lost credibility with leadership, and misaligned priorities across the org. Reporting becomes noise, not insight.
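Framework alignment can start small. As a minimal sketch, assume you keep a mapping from each detection to the MITRE ATT&CK technique IDs it covers, plus a list of the techniques your organization cares most about. The detection names and the priority list below are hypothetical; the technique IDs are real ATT&CK identifiers.

```python
def attack_coverage(priority_techniques, detections):
    """Summarize which priority ATT&CK techniques have at least one detection."""
    wanted = set(priority_techniques)
    covered = {t for techniques in detections.values() for t in techniques}
    hit = sorted(wanted & covered)
    missing = sorted(wanted - covered)
    percent = 100.0 * len(hit) / len(wanted) if wanted else 0.0
    return {"covered": hit, "missing": missing, "percent": percent}


# Hypothetical priority list and detection mapping.
priority_techniques = ["T1059", "T1078", "T1110", "T1486", "T1566"]
detections = {
    "Brute Force Login": ["T1110"],
    "Suspicious PowerShell": ["T1059"],
    "Login From New Country": ["T1078"],
}

report = attack_coverage(priority_techniques, detections)
```

In this example, three of the five priority techniques have a detection, and the gaps (T1486 and T1566) are named explicitly. A coverage figure with named gaps is far easier for leadership to act on than a count of alerts closed.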

At This Point, You’ve Got a Few Options: How to Deal with Your Splunk Challenges

If your team is buried in Splunk noise and struggling to deliver meaningful outcomes, the choices boil down to three realistic paths:

1. Try to Fix It In-House

You could dedicate internal resources to re-tuning alert rules, cleaning ingestion pipelines, and rebuilding reporting. But that assumes you have the time, headcount, and Splunk expertise to spare, on top of everything your team is already managing.

Operational cost:

- Diverts the team from threat response and strategic work

- Requires ongoing tuning, not just a one-time cleanup

- Likely leads to burnout or project delays

Business impact:

Headcount expansion or consultant spend just to tread water. You’ll likely reduce some pain, but risk recreating it in six months when things drift again.

2. Let the Problems Pile Up

The easiest option is to do nothing and hope your team can keep things running despite the noise. This is the default path for many overwhelmed organizations. But when false positives dominate and real signals get buried, you’re not just inefficient; you’re exposed.

Operational cost:

- Analysts spend hours chasing noise.

- High-value detections may be overlooked entirely.

- Reports become more difficult to trust and defend.

Business impact:

Delayed or missed incident response, increased dwell time, and risk of leadership losing confidence in your detection program. In some cases, real attacks go undetected until it’s too late.

3. Bring in Experts Who Do This Every Day

There’s a middle path: optimize Splunk with outside help while keeping your tools and staying in control. From tuning and ingestion cleanup to better alert logic and real reporting, expert support turns your SIEM from a source of noise into a source of value.

Operational benefit:

- Faster time to improvement

- Proven playbooks and use case coverage

- Offloads the work without giving up visibility

Business impact:

Lower internal burden, better detection fidelity, and reporting that your CISO and board can act on. All without switching platforms or building an internal SOC of your own.

Talk to a Team That Helps Security Orgs Clean Up Splunk Every Day

If alert fatigue, ingestion chaos, dashboard maintenance, and reporting pressure are wasting your team’s time, you don’t need to remove anything. You just need help getting control.

Deepwatch helps security teams manage and optimize their Splunk environments, allowing them to finally focus on threats instead of tool overhead. Talk to an expert at Deepwatch.

We’ll show you how other teams achieve faster, cleaner, and more useful outcomes without adding headcount or changing platforms.
